Prompt Injections, design patterns and a CaMeL

Prevalence of prompt injections and the lack of reliable solutions to it hampered the development of agentic LLMs. Most of the solutions I presented in the previous article are probabilistic in nature and can’t provide any real security guarantees. One of them stood out though. I have singled out the Design Pattern approach as the one which could, in the future, yield a more general and robust fix to the prompt injection issue.

There are some reasons to believe that this future might be starting now and this article will explore it.

Design patterns

The term “design pattern” comes from the field of the good old, regular software development which in turn got it from the architecture. It means a general solution to a commonly occurring problem. It is not a a piece of code that is used to solve an issue but more of a general way to construct your system to achieve specific goal. The same is the case with LLM design patterns. Those are ideas about how to structure your LLM-enabled system.

Some of those patterns are engineered to make the system safe from Prompt Injection attacks.

Dual LLM

The Dual LLM design pattern was proposed by Simon Willison in his blogpost in 2023. The main idea here is separation of tasks between Privileged LLM (P-LLM) and Quarantined LLM (Q-LLM).

The Privileged LLM is meant to interact only with the trusted input. This would usually mean user prompt. The P-LLM has access to tools – it could send emails, modify calendar, book a flight and so on.

The Quarantined LLM would interact with untrusted input. This means any input that could in any way contain prompt injection payload. This LLM is assumed to be compromised and as such it does not have access to tools and its output is treated as untrusted.

The security of this pattern originates in the separation of the two – unfiltered output of the Q-LLM should never find its way into the P-LLM input. This means that the Privileged LLM will never encounter any input that could be malicious and by extension is safe from prompt injection.

Interactions of the Privileged LLM with tools, users and the Quarantined LLM is handled by the Controller. It is a regular piece of software and not an LLM.

Below is an overview of how a Dual LLM would work in a scenario of a simple user query borrowed from the Willison’s article.

Event the author of this solution stated that it has multiple issues. It is however an interesting idea on which one could build a more robust prompt injection protection. It is exactly what Google’s DeepMind team did.

CaMeL

Some time ago, the DeepMind team released a paper detailing their defense mechanism called CaMeL (Capabilities for Machine Learning). It was inspired by the Willison’s Dual LLM.

The main similarity between the CaMeL and Dual LLM is in the architecture. Both utilise Quarantined LLM, Privileged LLM and the Controller. However, the authors of the CaMeL recognized some issues with the Dual LLM approach that needed to be dealt with. That’s why they expanded upon this basic concept.

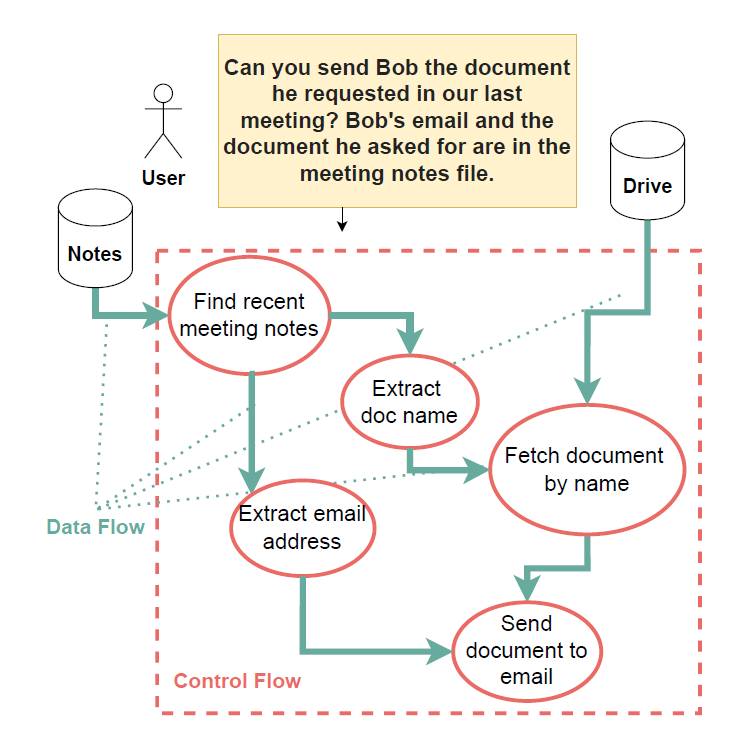

Data and control flows

To properly understand problems that are present within the Dual LLM one needs to learn about the concepts of data flow and control flow. The control flow is the “game plan” created in response to the user query. It contains steps and actions necessary to achieve the specified objective. Those might be:

- Reading data from a database.

- Summarizing some text or extracting data from it.

- Executing code.

- Reading or sending an email.

The data flow is about how the data used in all those steps is processed and transformed.

The graph below was borrowed from the original paper as it conveys the difference between the two quite well:

To secure the system from the threat of prompt injection one needs to secure both of the flows. However, the Dual LLM secures only the control flow which is handled by the Privileged LLM. The data flow is influenced by the Quarantined LLM without any restrictions. This means that an attacker cannot influence what actions will be taken and which tools will be used, but they can impact the arguments used in those actions and tools. They cannot decide to send an email, but can modify its recipient. It is, quite obviously, bad.

CaMeL design

To remediate it, CaMeL utilises capabilities. Capability is a piece of metadata that is assigned to a value. It stores information about its origin (where it comes from, which function created it) and permissions (who is allowed to read or modify it).

Below is an overview of this system, once again borrowed from the original paper:

The CaMeL works by first using the Privileged LLM to translate the user query into a pseudo-Python code that expresses the control flow. This code is then executed by the CaMeL interpreter. This part helps secure the control flow. Then, the CaMeL interpreter utilises the Quarantined LLM to process the untrusted data while maintaining the data flow graph and capabilities associated with variables. Based on this data it enforces security policies. Those are expressed as a Python code. This is the part that protects the data flow.

For example, even if the attacker could modify the recipient of a secret email by manipulating the Q-LLM, the CaMeL interpreter would not send it. This is because the attacker’s address is not listed as an allowed recipient in the capabilities.

It is not all roses

The CaMeL is not intended to protect against every possible attack. Specifically, it cannot protect against text-to-text attacks. An example would be a prompt injection that instructs the Q-LLM to incorrectly summarize an email. The data flow graph created by CaMeL can be however utilised in order to present the user with a source of data. This could greatly help with spotting the attack. The CaMeL is also not a fully autonomous system that does not require any user action. In a realistic implementation the user would be sometimes presented with a confirmation dialog to allow some actions or clarify them to the system.

But there are some freebies

The architectural divide into the Privileged and Quarantined LLMs yields some additional, unintended benefits. While the planning phase requires a sophisticated, probably big and expensive LLM, other tasks may be performed by a way less powerful model. It means that while the Privileged LLM probably needs to be outsourced to an LLM provider, the Quarantined LLM might be run locally. And, as the P-LLM is never exposed to the untrusted input, this input will also not be exposed to the LLM provider. This is an unexpected privacy benefit as the data extracted from emails or databases can be processed by a local LLM and by extension stay within your organisation’s network. The external provider would only see users’ queries.

While it is not a silver bullet for every possible security concern related to LLM usage, the CaMeL seems like a robust solution to the prompt injection attacks aiming at the control and data flows.

Summary

DeepMind team did a great job in expanding upon Willison’s previous work. In my opinion, the CaMeL is a big step in a right direction in the LLM security field. It reminds about the good old security engineering principles that are easy to forget in the age of AI solutions used to patch up other AI solutions. Quite refreshing to be honest.

Thanks for reading and I already invite you to next articles which, I hope, will arrive quite soon.

The featured image is by the user Mariakray from Pixabay.