Desktop applications on Windows, Linux, and macOS often handle sensitive information, such as passwords and API keys. While developers may think this data is cleared after use, it usually remains in memory longer than expected. This persistent memory issue is a subtle cross-platform vulnerability that even seasoned developers can overlook.

In this article, I will explain why and when this is a threat, along with possible mitigations. Enjoy!

How Operating Systems Handle Memory

While a program runs, its data lives in RAM. Most of that data is not cleared immediately after use; it persists until the system overwrites that memory region or the machine is powered off. Sensitive information can therefore linger in RAM, where anyone with sufficient privileges to inspect memory can read it.

- Windows: Memory can be accessed via debugging interfaces, which are notoriously permissive.

- Linux: The same applies, plus /proc/<pid>/mem. Access is restricted, though: on systems that limit ptrace, reading an unrelated process's memory typically requires root.

- macOS: There is a Hardened Runtime, which makes things a bit more secure (if the app uses it).

However, these are merely mitigations and not the correct method for resolving this issue.
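To make "inspecting memory" concrete, here is a minimal C sketch, assuming a Linux system with /proc mounted. It reads bytes from the process's own address space through /proc/self/mem; a sufficiently privileged attacker would open /proc/<pid>/mem of another process in the same way. The helper name `read_own_memory` is hypothetical and exists only for illustration.

```c
#define _POSIX_C_SOURCE 200809L

#include <assert.h>
#include <fcntl.h>
#include <stdint.h>
#include <sys/types.h>
#include <unistd.h>

/* Hypothetical helper: copy `len` bytes starting at `addr` out of this
 * process's own address space by reading /proc/self/mem (Linux only).
 * Returns the number of bytes read, or -1 if the file cannot be used. */
ssize_t read_own_memory(const void *addr, void *out, size_t len) {
    assert(addr != NULL && out != NULL);

    int fd = open("/proc/self/mem", O_RDONLY);
    if (fd < 0) return -1;

    /* The file offset is interpreted as a virtual address. */
    ssize_t n = pread(fd, out, len, (off_t)(uintptr_t)addr);
    close(fd);
    return n;
}
```

Calling `read_own_memory(secret, copy, sizeof secret)` on a buffer that still holds a password returns the password bytes, which is exactly what a memory-dumping tool relies on.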

Developers' Responsibility

The most important thing to note is that none of the systems clears sensitive data from the app’s memory region. It is entirely the developers’ responsibility to secure it. High-level languages and their frameworks offer various APIs for erasing sensitive data from memory. It is important to remember that even if a variable goes out of scope or is deallocated, its contents may persist until overwritten. This is why such APIs exist. Furthermore, even when the app is written in the C language, compilers might optimize away attempts to clear memory – see Locking the Vault: The Risks of Memory Data Residue.
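The compiler caveat can be illustrated with a short C sketch. A plain `memset` right before `free` is a dead store from the optimizer's point of view and may be removed. One common workaround, shown here under the assumption that no platform primitive is available, is to call `memset` through a volatile function pointer. The names `memset_no_elide` and `zero_sensitive` are hypothetical. The C standard does not strictly guarantee this idiom, so where they exist, `explicit_bzero` (glibc, BSD), `memset_s` (C11 Annex K, optional), or `SecureZeroMemory` (Windows) are the safer choice.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* The pointer is volatile, so the compiler must load it at run time and
 * cannot prove that the call is a plain memset it is allowed to drop. */
static void *(*const volatile memset_no_elide)(void *, int, size_t) = memset;

/* Hypothetical helper: zero `len` bytes at `buf`. */
void zero_sensitive(void *buf, size_t len) {
    assert(buf != NULL || len == 0);
    if (len > 0) memset_no_elide(buf, 0, len);
}
```

A caller would run `zero_sensitive(key, sizeof key)` immediately before the buffer goes out of scope or is freed.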

Below are examples of how to secure sensitive data in various languages. As we will find out, sometimes it is not possible.

C

The program below allocates memory for a password and clears it after use, ensuring that no data remains in memory:

#include <stdio.h>

#include <string.h>

#include <stdlib.h>

#include <unistd.h>

// Securely wipe memory to prevent sensitive data recovery

static void secure_wipe(void *target, size_t bytes) {

volatile unsigned char *ptr = target;

while (bytes--) *ptr++ = 0;

}

void demo_clear_memory() {

const size_t BUFFER_SIZE = 32;

char *password_buffer = malloc(BUFFER_SIZE);

if (!password_buffer) return;

// Get password securely without echo

const char *user_input = getpass("Password: ");

if (user_input) {

// Ensure password fits in buffer with room for null

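size_t password_length = strlen(user_input);

// Truncate instead of wrapping around: a modulo here would turn a 31-character password into length 0

if (password_length > BUFFER_SIZE - 1) password_length = BUFFER_SIZE - 1;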

// Copy password to secured buffer

memcpy(password_buffer, user_input, password_length);

password_buffer[password_length] = 0;

// Wipe original input to prevent leaks

secure_wipe((void*)user_input, strlen(user_input));

// Show masked password

printf("Stored: %.*s\n", (int)password_length, "********");

}

// Clean up sensitive data

secure_wipe(password_buffer, BUFFER_SIZE);

free(password_buffer);

printf("Cleared. Enter to exit...");

getchar();

}

int main() {

demo_clear_memory();

return 0;

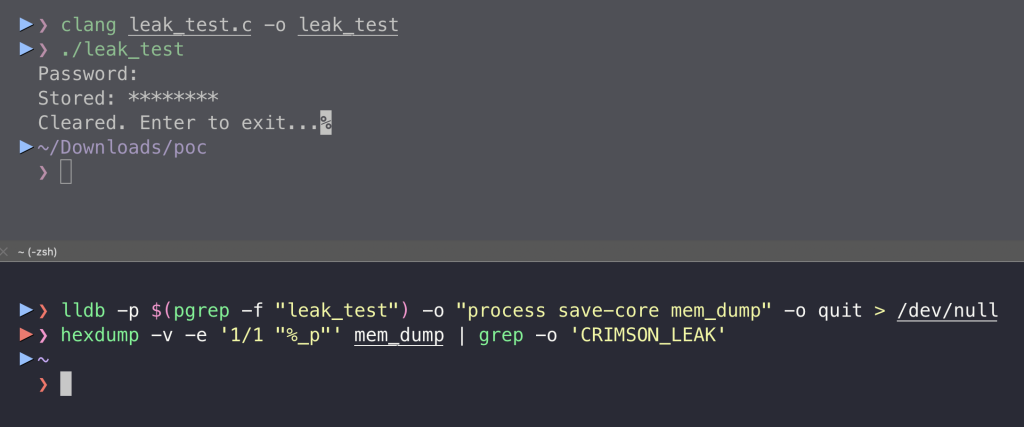

}When compiled and run, we can observe that the password was cleared from memory:

This is how it should work, but as much as I love C, who writes apps in C these days?

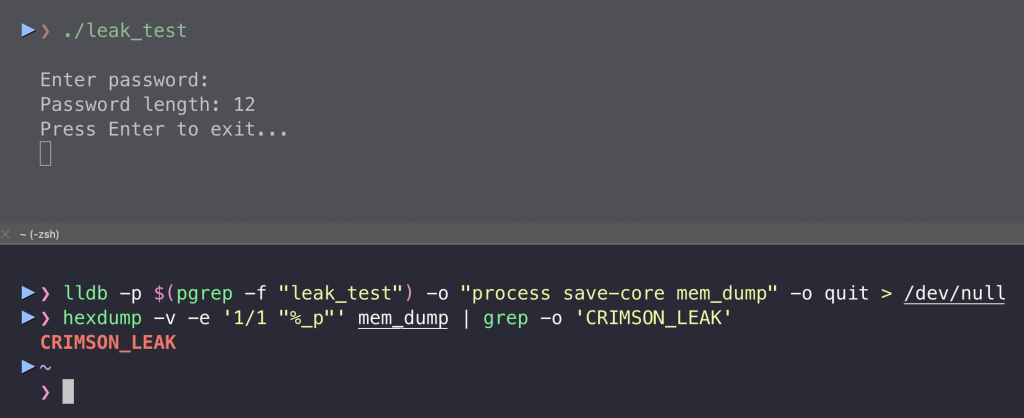

C#

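In C#, we can use SecureString as the sensitive data container. On Windows, this approach encrypts the password in memory and zeros out the memory when disposed. On other platforms the contents are not encrypted, and Microsoft advises against SecureString for new development, so treat it as a partial measure: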

using System;

using System.Security;

class Program

{

static void Main()

{

Console.Write("Enter password: ");

using (SecureString securePwd = new SecureString())

{

ConsoleKeyInfo key;

while ((key = Console.ReadKey(true)).Key != ConsoleKey.Enter)

{

securePwd.AppendChar(key.KeyChar);

Console.Write("*");

}

Console.WriteLine($"\nPassword length: {securePwd.Length}");

Console.WriteLine("Press Enter to exit...");

Console.ReadLine();

}

}

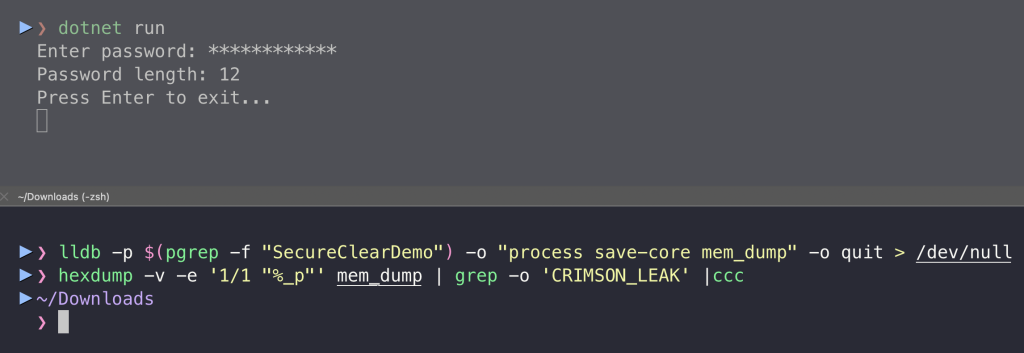

}As we can see in this case, the password also could not be found in the memory dump:

The code is also shorter.

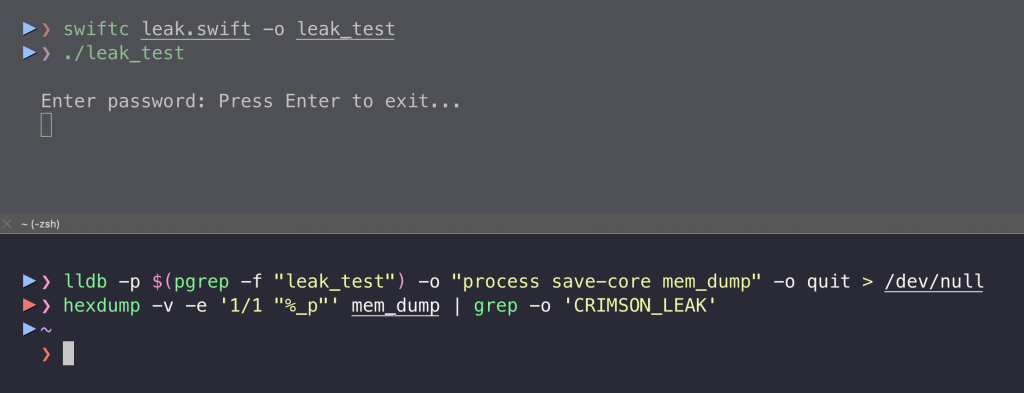

Swift

Swift does not provide a built-in secure memory abstraction like C#. There is no ready-to-use API call, and there is an issue even when we try to clear the memory buffer directly with memset:

import Foundation

print("Enter password: ", terminator: "")

if let cString = getpass("") {

let password = String(cString: cString)

print("\nPassword length: \(password.count)")

// Zero out the buffer after use

memset(UnsafeMutableRawPointer(mutating: cString), 0, strlen(cString))

print("Press Enter to exit...")

_ = readLine()

}

As shown below, the password remained in memory. The cause is String(cString: cString): converting the data to a String copies it into storage that we do not control and never erase:

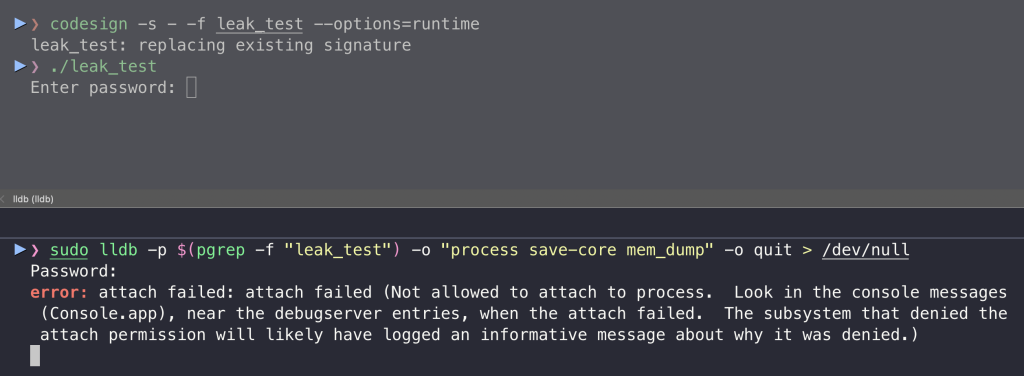

I found a workaround for that, shown below, where I keep the password in a manually managed buffer:

import Foundation

print("Enter password: ", terminator: "")

if let cString = getpass("") {

let length = strlen(cString)

// Allocate a buffer

let buffer = UnsafeMutablePointer<CChar>.allocate(capacity: length + 1)

buffer.initialize(from: cString, count: length + 1)

// ... use `buffer` for authentication, etc. ...

// Zero out the buffer after use

memset(buffer, 0, length + 1)

buffer.deallocate()

// Also zero out the original cString from getpass

memset(UnsafeMutableRawPointer(mutating: cString), 0, length)

print("Press Enter to exit...")

_ = readLine()

}

As shown below, the sensitive data is gone from memory:

Strangely, there is no API for that. If you want a reusable “secure string” type, you can wrap a C buffer in a Swift class and manage zeroing in deinit.

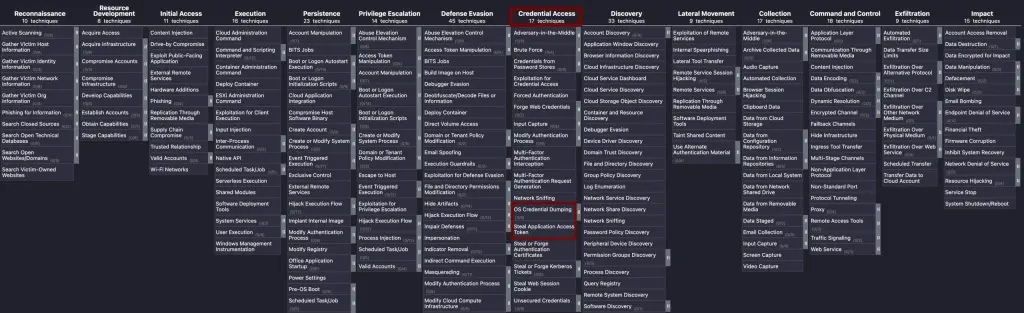
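As a rough illustration of the C side of such a wrapper, the sketch below defines a small buffer type that wipes its contents before releasing them. All names (`secret_buf`, `secret_buf_init`, `secret_buf_wipe`, `secret_buf_destroy`) are hypothetical, and the Swift class that would call `secret_buf_destroy` from `deinit` is not shown.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical container: owns a heap buffer that is wiped before release. */
typedef struct {
    unsigned char *data;
    size_t len;
} secret_buf;

/* Overwrite through a volatile pointer so the stores are not optimized away. */
void secret_buf_wipe(secret_buf *sb) {
    assert(sb != NULL);
    volatile unsigned char *p = sb->data;
    for (size_t i = 0; i < sb->len; i++) p[i] = 0;
}

/* Allocate a zero-initialized buffer of `len` bytes; returns 0 on success. */
int secret_buf_init(secret_buf *sb, size_t len) {
    assert(sb != NULL);
    sb->data = calloc(len ? len : 1, 1);
    sb->len = sb->data ? len : 0;
    return sb->data ? 0 : -1;
}

/* Wipe, then free. Safe to call more than once. */
void secret_buf_destroy(secret_buf *sb) {
    assert(sb != NULL);
    secret_buf_wipe(sb);
    free(sb->data);
    sb->data = NULL;
    sb->len = 0;
}
```

Keeping the secret in one owned buffer, rather than in a String, means there is a single place to zero and a single lifetime to manage.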

Threat Model

For reference, I will use the MITRE ATT&CK matrix to put this into a broader context. The risk arises only once malware has gained access to the system. The relevant technique is OS Credential Dumping (T1003).

Exploitation typically involves reading process memory through APIs or by attaching a debugger. In addition, CWE-316: Cleartext Storage of Sensitive Information in Memory notes that sensitive data can be written to disk in the form of core dumps or “might be inadvertently exposed to attackers due to another weakness”, which is a kind of open ending for security researchers.

Depending on the OS, some built-in mitigations can prevent harvesting.

Mitigations

macOS protects against the extraction of sensitive data left in memory through the Hardened Runtime, which prevents debuggers from attaching to an app. However, it is opt-in per app rather than system-wide, and developers do not always enable it. Furthermore, this protection is not foolproof: it can be bypassed or weakened by misconfiguration.

On Linux, ptrace restrictions (the Yama ptrace_scope setting) are enabled system-wide by default on many distributions. Unlike the Hardened Runtime, however, they do not prevent root from attaching to any process. Moreover, a parent process can still attach to its children and read their memory. Root can also remove this restriction system-wide:

echo 0 | sudo tee /proc/sys/kernel/yama/ptrace_scope
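An application does not have to rely on the system-wide setting alone. The sketch below, which assumes Linux, reads the current Yama level and additionally marks the calling process as non-dumpable with `prctl(PR_SET_DUMPABLE, 0)`, which stops unprivileged processes from attaching to it with ptrace. Root (more precisely, a process with CAP_SYS_PTRACE) can still attach, and the flag is reset by `execve`. The function names `read_ptrace_scope` and `make_process_undumpable` are hypothetical.

```c
#include <assert.h>
#include <stdio.h>
#include <sys/prctl.h>

/* Read the Yama ptrace_scope level (0 to 3) into `level`.
 * Returns 0 on success, -1 if the file is missing or unreadable,
 * for example on kernels built without Yama. */
int read_ptrace_scope(int *level) {
    assert(level != NULL);
    FILE *f = fopen("/proc/sys/kernel/yama/ptrace_scope", "r");
    if (!f) return -1;
    int ok = (fscanf(f, "%d", level) == 1);
    fclose(f);
    return ok ? 0 : -1;
}

/* Mark the calling process as non-dumpable and confirm that the flag
 * was applied. Returns 0 on success, -1 on failure. */
int make_process_undumpable(void) {
    if (prctl(PR_SET_DUMPABLE, 0, 0, 0, 0) != 0) return -1;
    return prctl(PR_GET_DUMPABLE, 0, 0, 0, 0) == 0 ? 0 : -1;
}
```

This is still only a mitigation: it raises the bar for same-user malware but does not remove secrets from memory.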

Windows, being Windows, offers no comparable default. Apps running under the same user can generally access each other’s memory.

Keeper Forcefield

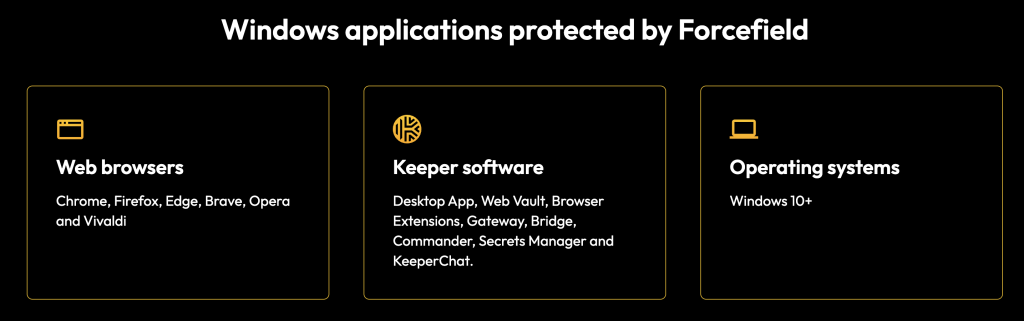

I recently discovered this solution, and I find it excellent. Forcefield fills a real gap in the Windows security model. It is a kernel driver for low-level process protection. It implements custom user-mode hooks to intercept and deny access to protected APIs, hardening memory protection for applications.

Still, even if this is a solid solution, it is only a mitigation and it works only for some apps.

Conclusion

Persistent storage of sensitive data in application memory poses a security risk for desktop software across major operating systems. While OS-level protections, such as macOS’s Hardened Runtime and Linux’s ptrace restrictions, exist, they offer limited security. Developers are primarily responsible for addressing this vulnerability. However, as seen in the Swift example, high-level languages sometimes lack standardized APIs for securely clearing memory. To mitigate this issue, programming languages should evolve with built-in mechanisms for secure memory handling, such as guaranteed zeroing functions and secure data containers that sanitize their contents.

Until these features become standard, sensitive data will remain at risk, relying heavily on developers’ practices, which can be inconsistent and prone to error. I have posted a proposal on the Swift forums.